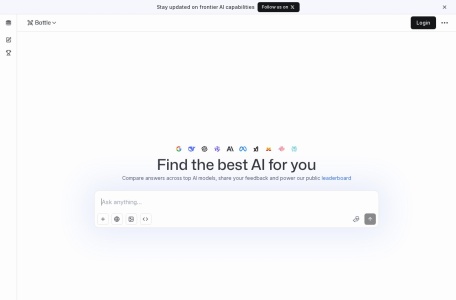

LMArena (also known as LMSYS Chatbot Arena) is a prominent online platform for evaluating, comparing, and interacting with large language models (LLMs) through a user-driven “arena” of head-to-head comparisons.

📊 What is LMArena?

| Aspect | Description |
|---|---|
| Core Concept | A free, accessible platform allowing users to test and compare different AI models side-by-side through text, dialogue, image generation, and code requests. |
| Origin | Originally created in 2023 as a research project by teams from UC Berkeley and Carnegie Mellon University. |
| Primary Method | A blind-test “arena”: users submit a prompt, and two anonymous models generate responses. The user then votes for the better answer, and the results feed into a public leaderboard. |
| Business Status | As of early 2026, it has evolved into a high-profile startup, having raised $150 million at a $1.7 billion valuation. |

🎮 Key Features and User Experience

The platform is designed for both casual experimentation and serious model comparison.

Three Main Modes: The interface offers Battle Mode (a blind test between two anonymous models), Side-by-Side Mode (the user selects which models to compare), and Direct Chat Mode (interaction with a single model).

Real-Time Leaderboards: Models are ranked using an Elo rating system based on user votes, creating a constantly updated “power ranking” visible on the site.

Free and Low-Barrier Access: Most functions require no registration, making it a popular tool for quick AI testing and comparison.
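To make the Elo-based ranking mentioned above more concrete, here is a minimal sketch of how pairwise votes could be turned into a leaderboard. It uses the standard Elo update formula; the starting rating of 1000, the update step `K = 32`, the model names, and the function names are all illustrative assumptions, not details taken from LMArena. The real leaderboard may use a different fitting procedure.

```python
from collections import defaultdict

K = 32                # assumed update step; not specified by the source
BASE_RATING = 1000.0  # assumed starting rating for every model


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(ratings, model_a, model_b, outcome, k=K):
    """Apply one vote in place.

    outcome is 1.0 if A won, 0.0 if B won, and 0.5 for a tie.
    """
    ra, rb = ratings[model_a], ratings[model_b]
    ea = expected_score(ra, rb)
    # A gains what B loses, so the total rating mass is conserved.
    ratings[model_a] = ra + k * (outcome - ea)
    ratings[model_b] = rb - k * (outcome - ea)


def leaderboard(votes):
    """Replay votes in order and return (model, rating) pairs, best first."""
    ratings = defaultdict(lambda: BASE_RATING)
    for model_a, model_b, outcome in votes:
        update_ratings(ratings, model_a, model_b, outcome)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical votes: (model shown as A, model shown as B, outcome for A).
votes = [
    ("model-x", "model-y", 1.0),  # user preferred model-x
    ("model-y", "model-z", 1.0),  # user preferred model-y
    ("model-x", "model-z", 0.5),  # user called it a tie
]

for name, rating in leaderboard(votes):
    print(f"{name}: {rating:.1f}")
```

One property worth noting in this sketch: a win against a higher-rated opponent moves the ratings more than a win against a lower-rated one, which is why a continuous stream of votes can keep a ranking “constantly updated” without recomputing everything from scratch.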

🏆 Impact as an Evaluation Benchmark

LMArena’s leaderboard has become an influential, albeit controversial, benchmark in the AI industry.

Industry Recognition: It is frequently cited as an “international authoritative large model ranking list” in media and corporate announcements.

Driving Competition: Major AI labs, including OpenAI, Google, Anthropic, and leading Chinese companies, actively submit their models to be ranked, using the results for marketing and research validation.

⚠️ Major Controversies and Criticisms

Despite its popularity, LMArena’s methodology faces significant scrutiny.

| Criticism | Details |
|---|---|
| Quality of User Voting | An analysis by data firm Surge AI found that 52% of winning answers on the platform were factually incorrect, suggesting voters often prefer longer, well-formatted, or confident-sounding answers over correct ones. |
| “Gaming the System” | Some companies have been accused of submitting specially optimized versions of their models to the arena that are tailored to win votes (e.g., using verbose, embellished responses) rather than reflect the publicly available model’s performance. |
| Debate Over Fairness | The platform’s reliance on untrained, anonymous user votes has led to debates about whether it measures true model capability or merely “popularity” and presentation, challenging its role as a definitive benchmark. |

💎 Conclusion

LMArena democratized AI model evaluation through an engaging, game-like interface and became a key industry reference point. However, its core reliance on unsupervised human voting has introduced significant challenges regarding evaluation quality and fairness. It stands as a pivotal yet contentious platform that reflects the broader difficulty of objectively measuring model capability.
